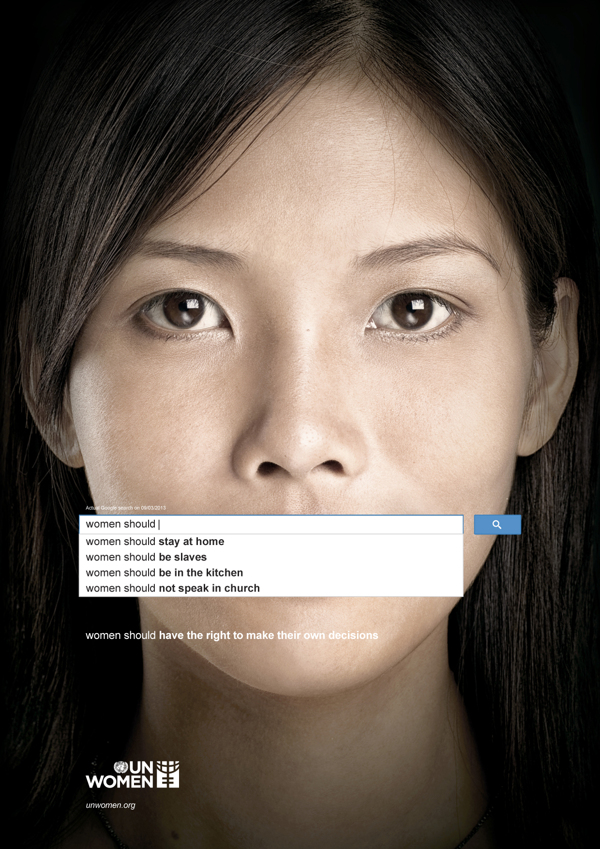

In light of the recent UN Women awareness campaign that spread across the web, revealing the prevalence of discrimination and sexism against women, it is natural to take a closer look at the Google autocomplete algorithm and how it affects the way we think about search.

The Google autocomplete algorithm is questionable.

Trending on Twitter for a while, the hashtag #womenshould, behind the very popular awareness campaign from UN Women, cast doubt on the Google autocomplete algorithm.

For those who are not familiar with Google autocomplete, it is a feature that suggests queries as you start typing in the search box, based on how popular those queries are.
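To make the idea concrete, here is a minimal sketch of popularity-based suggestions. It is not Google's implementation, which is not public; the `suggest` function and the query log are made up for illustration, and the only assumption is the one stated above: suggestions are past queries that start with what you typed, ranked by how often they were searched.

```python
from collections import Counter


def suggest(prefix, query_log, limit=4):
    """Return the most frequent logged queries that start with `prefix`.

    Hypothetical helper for illustration only, not a real Google API.
    """
    prefix = prefix.lower()
    # Count how often each (lower-cased) query appears in the log.
    counts = Counter(q.lower() for q in query_log)
    # Keep only the queries that begin with what the user has typed so far.
    matches = [(q, n) for q, n in counts.items() if q.startswith(prefix)]
    # Most popular first; ties are broken alphabetically.
    matches.sort(key=lambda item: (-item[1], item[0]))
    return [q for q, _ in matches[:limit]]


# Made-up query log standing in for searches from previous users.
query_log = [
    "weather today", "weather today", "weather today",
    "weather tomorrow", "weather tomorrow",
    "weather radar",
    "web design",
]

print(suggest("wea", query_log))
# ['weather today', 'weather tomorrow', 'weather radar']
```

Note that a ranking like this simply mirrors whatever previous users typed most often. Nothing in it checks whether a suggestion is fair or true.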

Even though the autocomplete function has been there for almost nine years, no one really dared to admit that this was an issue, until now. The UN campaign has been very successful in making people more aware of sexism in our world.

So, what does Google have to say about that?

“We believe that Google should not be held liable for terms that appear in Autocomplete as these are predicted by computer algorithms based on searches from previous users, not by Google itself,” the company said.

Google seems to blame its complex algorithm, which tends to perpetuate negative stereotypes. Perhaps it is time for the company to acknowledge a degree of ethical responsibility and to develop more formalised methods for dealing with such suggestions.

What else do we learn from the Google autocomplete system?

Sexism on the World Wide Web isn’t the only concern raised recently. Google actually turns a blind eye to many controversial topics such as race, religion, nationality and other highly stereotyped subjects.

A Harvard study published earlier this year found that names typically associated with black people are 25% more likely to bring up adverts related to criminality. Responsibility is harder to pin down here, because it is up to advertisers to decide which keywords trigger their ads.

Similarly, religions are easy targets because of their historical background. Whether you type in “muslims”, “jews” or “christians”, autocomplete displays hate-related suggestions, as if Google did not consider these to be protected groups.

Nationalities aren’t spared either. We can learn that Americans are lazy, fat, ignorant and weird, whereas French people are rude, skinny, gay and dark. Lovely!

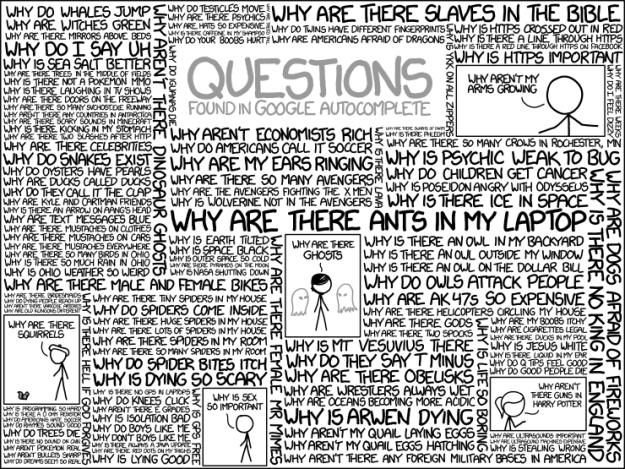

On a lighter note, the Google autocomplete algorithm also throws up entertaining, comical and unexpected search suggestions like:

“What would a chair look like if your knees bent the other way” or “If I ate myself, would I be twice as big or disappear completely?”

I’ll just give you a moment to think.

If you have a spare five minutes, I suggest you head over to this collection of autocomplete search results.

What can we expect from Google in the future?

Google has already had to take action in the past, after a German businessman filed a lawsuit over autocomplete suggestions that associated his name with Scientology and fraud. This summer, a similar case involved a Japanese man who was wrongly linked to criminal activities. The list goes on and on. These rumours can irreparably damage someone’s life, and it is indirectly Google’s responsibility to protect an individual’s privacy by keeping such unverified suggestions off the top of the search results. This is obviously a long-term process, and in the future we could expect to see, for example, a “report” button like the one on YouTube to downrank deceptive content.

Additionally, to block suggestions that promote hate and violence, Google created protected groups, for which harmful content regarding ethnic origin, colour, religion, sex, etc. is removed automatically. However, most of the queries seen above seem to get past Google’s filter, and this needs to be investigated if autocomplete is to suggest more ethical content in the future.

The latest Google algorithm update, Hummingbird, which was designed to tackle more complex queries, isn’t going to change things. Google did put a lot of effort into making its search results faster and more precise, but it didn’t modify its autocomplete algorithm or update its protected groups. This doesn’t seem to be Google’s priority right now.