In the months since Google’s Head of Webspam, Matt Cutts, announced the roll-out of the much-anticipated Penguin 2.0 update, how have the SERPs been affected? In the immediate aftermath of the update, the majority of SEO experts and webmasters seemed to have been left somewhat perplexed and even slightly underwhelmed by the initial results.

The overall feeling was that we would see a gradual change over the course of the summer, and indeed, a recent poll conducted by SEO Roundtable found that 50% of readers had been hit by Penguin 2.0, with 65% being unable to recover. As the dust settles, I thought it important to assess whether or not the most common predictions SEOs made in the run-up to Penguin 2.0 have proved to be accurate.

Authoritative, niche content being rewarded with higher PageRank

Firstly, there was evidence to suggest that websites would be lowered in Google results if they featured content of a considerably wide and diverse subject matter.

So, for example, a directory or a mediocre-at-best online magazine covering everything from nail art trends to advice on cheap holidays to domestic upkeep tips, with little to no social interaction, would be in danger of being hit.

Google’s crawlers have begun to look at very specific niches, rewarding sites that show clear subject knowledge in the area being searched and whose content comes from authoritative, influential industry professionals in that field.

Therefore, Google searches and queries about a specific niche will turn up those niche websites post-Penguin 2.0.

For content marketers, this now means that identifying the best authorities in their specific field via their digital footprints on social media and other websites, and then collaborating with them to provide excellent articles or advice, could ensure good positions in the SERPs for the rest of 2013.

Guest posting

Guest posting caused the biggest panic for SEOs and content marketers pre-Penguin. It was predicted that webmasters who had previously written content to be hosted externally from their own sites would be penalised for this technique.

However, so far, it hasn’t had the impact that many people feared. As long as guest posts aren’t used to manipulate the way in which PageRank is passed, and still consist of natural, relevant and authoritative content hosted on high-quality websites, Penguin has waved them through with a nod of the head.

In order to implement a successful guest post on an external site to boost your rankings, it is now important to do the following:

- Think narrowly: Following on from the first point on relevant niche sites, you must now consider how relevant the external site is to your niche. The narrower their subject focus, and the more relevant your post is to that focus, the better.

- Try to forget about building links: I’m not just talking about the age-old Search Laboratory motto of “don’t build links, earn them!” This is of course still key. However, it’s also important to remember that brand visibility and credibility within the niche are critical too.

- Don’t be selfish: When writing content to be hosted on an external site, include links to other reliable articles across the web too, in order to add validity and provide genuinely useful resources for your reader.

Spam Reporting tool

A sign that the shift in SERPs may be gradual over much of 2013 is the new Spam Report tool that was introduced on May 21st.

A lot of SEOs have been left confounded by the fact that some badly optimised pages slipped through the net and are still high in the Google rankings. But we could see more of them drop now that we can report spam by filling out a simple form.

So has the Spam Report tool had an impact so far? Have people been using it? Cutts certainly placed enough emphasis on this new tool in May, but it may still be too early to discern whether it has had an impact on the SERPs. It is likely to make an impression on the next big Google update.

Direct match anchor text

Google’s crawlers aren’t just searching homepages for wrongdoings such as direct match anchor text: they’re now believed to be penetrating to the deepest pages, after Cutts claimed prior to the roll-out that Penguin 2.0 would go deeper than the last.

Some of the early Penguin 2.0 outcasts who made up the 2.3% of the initial casualties indeed had over twice as many direct match, or money-making, keywords on their sites as competitors at the time that Penguin 2.0 was rolled out.

However, many SEOs believe that until we see solid, published proof of a drop in the traffic of these sites, they could still be ranking well for other keywords.
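To make the comparison above concrete, here is a minimal Python sketch of how you might measure the share of direct match anchor text in a link profile and compare it with a competitor’s. The function name, the sample anchors and the keyword list are all hypothetical, and this is only an illustration of the ratio being discussed, not a description of how Google measures it.

```python
def exact_match_share(anchors, target_keywords):
    """Return the fraction of anchors that exactly match a target keyword.

    Matching is case-insensitive and ignores surrounding whitespace.
    This is an illustrative measure only, not Google's method.
    """
    if not anchors:
        return 0.0
    targets = {kw.strip().lower() for kw in target_keywords}
    matches = sum(1 for anchor in anchors if anchor.strip().lower() in targets)
    return matches / len(anchors)


# Hypothetical data: anchor text of links pointing at two sites.
keywords = ["cheap holidays"]
site_anchors = [
    "cheap holidays", "Cheap Holidays", "cheap holidays",
    "Example Travel", "click here", "www.example.com",
]
competitor_anchors = [
    "cheap holidays", "Rival Travel", "this guide",
    "www.rival.example", "their blog",
]

site_share = exact_match_share(site_anchors, keywords)
competitor_share = exact_match_share(competitor_anchors, keywords)
print(f"site: {site_share:.0%}, competitor: {competitor_share:.0%}")
```

In this made-up example the site’s direct match share is more than twice the competitor’s, which is the kind of gap the early casualties were reported to show.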

Lower tolerance of poor-quality links

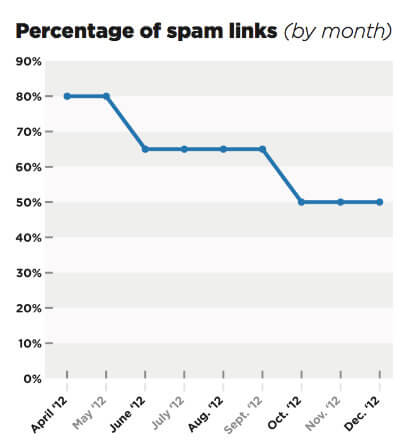

Back in March, Barry Schwartz of Search Engine Land predicted that the threshold for sub-par inbound links would shrink. This prediction was correct.

Whereas the threshold for sub-par links, such as links on directories, used to be 80%, the tolerance is now down to about 50%. What this means is that if 50% or more of the links pointing towards your site are questionable, you could see a significant drop in rankings, and the threshold keeps getting smaller.
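As a rough illustration of that arithmetic, the Python sketch below checks a link profile against the 50% figure quoted above. The function names, the `questionable` flag and the sample data are hypothetical; deciding which links count as questionable is a judgment call you would have to make yourself, and the thresholds are the estimates cited in this article rather than anything Google has published.

```python
def questionable_link_share(links):
    """Return the fraction of inbound links flagged as questionable."""
    if not links:
        return 0.0
    flagged = sum(1 for link in links if link["questionable"])
    return flagged / len(links)


def exceeds_tolerance(links, threshold=0.5):
    """True if the questionable share meets or exceeds the threshold.

    The default of 0.5 is the estimate quoted in the article.
    """
    return questionable_link_share(links) >= threshold


# Hypothetical profile: 6 directory links flagged out of 10 inbound links.
sample_links = (
    [{"source": f"directory-{i}.example", "questionable": True} for i in range(6)]
    + [{"source": f"blog-{i}.example", "questionable": False} for i in range(4)]
)

share = questionable_link_share(sample_links)
print(f"{share:.0%} questionable")
print("at risk under the 50% estimate:", exceeds_tolerance(sample_links))
print("at risk under the old 80% estimate:", exceeds_tolerance(sample_links, 0.8))
```

A profile like this one would have passed under the older 80% estimate but falls foul of the 50% one, which is why a link audit that looked safe last year may be worth repeating.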

And what does this all mean for SEOs?

There had been panic in the SEO industry in the build-up to the Penguin roll-out; perhaps understandably, after the impact of the last one in 2012. But businesses and SEOs should be optimistic, not fearful. Instead of being seen as a penalty, updates should be viewed as a reward for those who create well-written, useful and engaging content with the consumer in mind, supported by optimisation that adheres to the Google guidelines rather than manipulating them.