We know that duplicate content is bad for SEO and that the rankings of a website can be harmed if it features duplicate content. However, sometimes sites are so large that it’s often a difficult task to both identify and remove/solve any instances of site-wide duplicate content. While the use of Canonical Tags can often achieve desired results, it can sometimes be difficult (especially for large sites) to implement this as a site-wide solution. Often, it can be quicker and easier to simply block certain pages or directories which we don’t require to rank from being crawled by search engines using the robots.txt file.

Disclaimer: Before editing your robots.txt file, remember than even small changes can be very costly when it comes to blocking crawler access to areas of your site. Before blocking pages, you should always determine how much traffic they are receiving as Organic landing pages within your Analytics data. As always – research heavily beforehand!

There are a number of common situations in which you might find your site has been affected by duplicate content. Some of these have been outlined below:

1) Where sites serve content through both <>http:// and a secure http:// page variant.

Eg. https://www.searchlaboratory.com/ and https://www.searchlaboratory.com/

This in effect leads to a situation in which search engines can crawl and index two identical sites. While it’s likely that Google’s algorithm is built to understand that some sites may have encrypted duplicates, it’s always better to be safe than sorry! By blocking one version within robots.txt, we can ensure consistency in that only one version of the site is indexed.

To achieve this, we need to place a separate robots.txt file on each domain version – one for the http:// version and one for the https:// version. This will completely block all pages on the https:// version.

http:// version

User-agent: *

Disallow:

https:// version

User-agent: *

Disallow: /

2) Where a site has a formatted version of an existing HTML page – which is specifically tailored for printing or as a PDF version.

Eg. https://www.searchlaboratory.com/blog-post/ and https://www.searchlaboratory.com/blog-post/webprint/

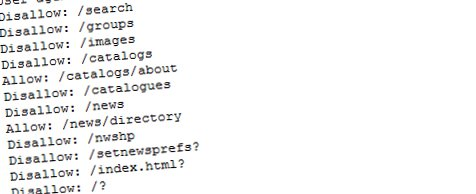

These pages are not required to rank as landing pages and they are often a source of many duplicate content issues. They can usually be blocked using a wildcard operator (an asterisk) within robots.txt. A wildcard operator can stand for any number of any characters. We can therefore use it to identify any URL which features a conventional term such as ‘/webprint’ or which ends in ‘pdf’.

User-agent: *

Disallow:*/webprint

Disallow:*pdf$

Note the use of the Dollar sign in the robots statement above – this specifies that any blocked URL’s must end after the string ‘pdf’. For example; the following URL would not be blocked even though it contains the string ‘pdf’:https://www.searchlaboratory.com/are-pdf-files-good-for-seo/ – while this URL would be blocked:Â https://www.searchlaboratory.com/blog-post.pdf.

3) Where a CMS error has led to multiple versions of the same pages which have the same URL’s, but with different letter casing.

Eg. https://www.searchlaboratory.com/blog-post-one/ and https://www.searchlaboratory.com/blog-post-ONE/

Sometimes faulty Content Management Systems can create multiple versions of the same page. These can often have identical URL’s but with different letter casing. If, say, all instances of duplicate pages featured upper-case lettering, we could block all of these from being crawled by search engines in one fell swoop. Because search crawlers are case-sensitive, we can order them to ignore these duplicate page versions by blocking all URL’s which feature upper-casing. We might then need to ‘allow’ specific pages or directories which we do not wish to block and which feature upper case characters.

User-agent: *

Disallow:*A

Disallow:*B

Disallow:*C

Disallow:*D

Disallow:*E

# Etc. You get the idea.

Allow: /seo/Blog-post/

Note: This will block all URL’s which feature any upper-case letter – not just duplicate pages. You must always be sure there are no unique pages which might be accidentally blocked as a result of this.

4) Where page filters and session ID’s add query parameters to the page URL.

Eg. https://www.searchlaboratory.com/ and https://www.searchlaboratory.com/?sessionid=14576938&pageid=2

Query parameters often result in duplicate pages with different URL’s. We can effectively block Google from crawling any page URL with query parameters by disallowing them within robots. This can prevent multiple versions of the same page being indexed and cut down duplicate content. Again, the wildcard operator can come in handy here:

User-agent: *

Disallow: *sessionid

Disallow: *pageid

Care should be taken so as not to block query parameters which truly indicate a unique page.

5) Where site content is segmented onto multiple pages (paginated) and each page has duplicate Title tags, Meta Descriptions and very similar content.

Eg. https://www.searchlaboratory.com/list-of-clients/ and https://www.searchlaboratory.com/list-of-clients/page-2/

Pages such as these can cause issues with duplicate content. If it is too difficult for your site to implement the rel=next and rel=prev solution, consider blocking all but the first page using your robots.txt file:

User-agent: *

Disallow: /list-of-clients/page*/

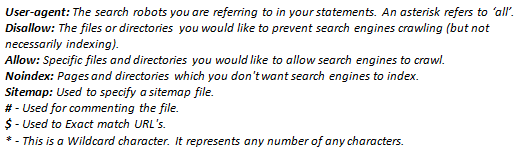

All in all, solutions to these kinds of issues require imaginative thinking. These are based on being able to find patterns in your instances of duplicate content. If there is a specific string, character or even letter-casing which applies only to your duplicate content then you can be sure that it can blocked within your robots file. Use the key above to ensure you use your robots file to its fullest.

Note that the ‘Crawler Access’ tool within Google Webmaster Tools is great way to check whether your robots.txt file is working properly.