A healthy website is always a desirable starting point for search engine optimisation. If you are a site owner, you can use Google Webmaster Tools (WMT), a free tool from Google that helps you configure your site and spot issues on it. Google also uses this tool to contact you about any issues relating to the site. These messages include:

- Increases in not found errors

- Googlebot cannot access your site

- Big traffic changes for top URL

- Possible outages

- Notice of suspected hacking

- Unnatural link warnings

You can see there are a number of very important messages there, so keeping an eye on these is crucial.

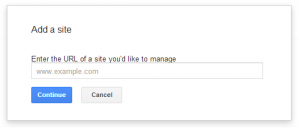

To start using Webmaster Tools there are only a few simple steps.

- Set up a Google account using the email address you wish to use to sign in to WMT

- Sign in to WMT

- Click add a new site

- Enter your home page URL then press continue

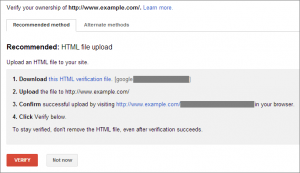

- You will now be presented with options to verify ownership of the site:

    - a. If you choose the HTML verification file method, upload the file to the root directory of your site, click the link to confirm it has been uploaded correctly, then click verify.

    - b. If you click on the alternate methods tab there are three further options:

        - i. HTML tag: place a snippet of code into the HTML of your home page.

        - ii. Google Analytics: if you already use GA you can use it to verify your site in WMT. For this to work you need administrator privileges in GA and must be using the asynchronous tracking code.

        - iii. Domain name provider: if you have login details for your domain name provider then, as long as the feature is available, you can add a new TXT or CNAME record. Some companies offer a domain registrar verification tool which can be used instead of adding one of these records.
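If you use the HTML tag method, it can be worth confirming that the tag really is in your home page before clicking verify. The sketch below is one way to do that in Python. It assumes the snippet is a `<meta name="google-site-verification">` tag, and the token and sample page are made up for illustration.

```python
from html.parser import HTMLParser


class VerificationTagFinder(HTMLParser):
    """Collects the content value of any google-site-verification meta tag."""

    def __init__(self):
        super().__init__()
        self.tokens = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. <meta name>), so default to "".
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "google-site-verification":
            self.tokens.append(attr_map.get("content", ""))


def find_verification_tokens(html):
    """Return every verification token found in the given HTML string."""
    finder = VerificationTagFinder()
    finder.feed(html)
    return finder.tokens


# Hypothetical home page source; the token is a placeholder, not a real one.
sample_html = """
<html><head>
<title>Home</title>
<meta name="google-site-verification" content="EXAMPLE_TOKEN_123" />
</head><body></body></html>
"""

print(find_verification_tokens(sample_html))  # ['EXAMPLE_TOKEN_123']
```

An empty list would suggest the tag is missing or has been stripped out by a template or CMS.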

Once you have your site verified it’s time to start configuring your site and looking for errors.

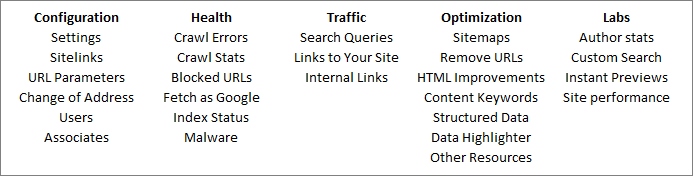

There are a number of pages to look at here. The image below shows each one:

The table below covers each page, with brief notes on what each feature does and how it can be used to aid the health of your site:

| Category | Page | Description |
|---|---|---|
| Configuration | Settings | There are three settings you can change on this page: geographic target, preferred domain and crawl rate. Geographic target allows you to specify which country your target audience resides in. Changing this to UK can have a positive ranking effect in Google.co.uk but may have a detrimental one in Google.com, Google.de, Google.fr etc. If you want to target UK users only then it’s wise to select UK, whereas if you want to attract worldwide users it’s best not to specify a geographic target. If you have a .com domain with multiple sub-folders, you can add each one as a new site and geotarget it individually. The preferred domain option lets you choose whether URLs are displayed with or without the www. I tend to select www, just because I like it! In reality it doesn’t matter as long as both versions are handled correctly with 301 redirects or canonical tags to avoid duplicate content. The crawl rate feature lets you limit Google’s crawling of your site; in the main this is left alone at its default, Let Google optimize for my site. |
| Configuration | Sitelinks | If you search for your brand name you will generally see sitelinks underneath the root result. These are links to deeper pages which Google sees as important enough to pull into a SERP. Sometimes Google displays links you may not want to show, and this is where you can demote them. |
| Configuration | URL Parameters | URL parameters are the parts of a URL that appear after a question mark. They can be used for filtering and tracking but can also cause duplicate content issues. If Google has discovered any parameters whilst crawling your site, they will be displayed here. You can tell Google how each one should be handled by clicking edit and selecting the appropriate options. Changing settings here can have a massive effect on the number of URLs being indexed by Google, so we recommend changing anything only if you know how parameters work and the effect your changes will have. |
| Configuration | Change of Address | This feature is used as part of the domain migration process. When the site is live on the new domain and all the 301 redirects are working, you can tell Google that the site has moved. To do this, both the old and the new sites need to be verified in WMT. |
| Configuration | Users | Here you can grant other users access to your data. Restricted access lets them see the data only, whereas full access also allows them to make changes. |
| Configuration | Associates | An associate is a trusted user who can perform tasks relating to other Google products but can’t see any data or make any changes in WMT. |
| Health | Crawl Errors | Here you can see any crawl errors Google has discovered. These could be server errors, 404 pages, soft 404 pages, not followed pages, etc. Have a look through each one and if necessary download the data to mine in Excel. Normally there is a pattern, and simple fixes will make a big difference to these numbers. In an ideal world there would be no errors here. |
| Health | Crawl Stats | This report shows activity from Googlebot over the last 90 days, highlighting pages crawled per day, kilobytes downloaded per day and time spent downloading a page. There are no set targets to work towards here; it’s more useful as an indication of site performance. |
| Health | Blocked URLs | Here you can see which URLs are blocked via the robots.txt file. If you see a drop in traffic from a certain date it’s always worth checking this file and the settings in WMT in case any pages were blocked by mistake. |
| Health | Fetch as Google | A very useful tool to see how Google crawls a page. You can see the HTTP response returned, see any linked CSS and JavaScript files and, if necessary, submit the URL to Google’s index. When you submit the URL you can also specify that you want Google to crawl any linked pages. This is a great feature for new page launches or updates. |
| Traffic | Search Queries | This section shows how many people have searched for your site and which queries they used to find it, along with impressions, clicks, CTR and average position. This data can be mined to help find new keywords or phrases to include in your strategy. You can also select filters to look at the data in more detail, for example to see only impressions and clicks from the UK or from mobile devices. You can also look at top pages and see which have the most impressions and clicks. |
| Traffic | Links to Your Site | Shows the latest data on links Google has discovered pointing to your site. This is a great feature for link building teams to see if the links they have worked on have been found by Google. However, the data here has been known to differ slightly from link tools such as Majestic and Open Site Explorer. It’s great as an indication but should be used alongside other data sources. |
| Traffic | Internal Links | Data on internal links, i.e. pages linking to each other within your site. This data can help you find pages with low PageRank and indicate which pages to use in future content campaigns to help the flow of link equity around your site. |
| Optimization | HTML Improvements | Google shows potential issues it has discovered when crawling your site: pages with duplicate titles, duplicate meta descriptions, short titles, etc. You should look to fix these issues, especially if there are a large number of them. |
| Optimization | Content Keywords | Shows a list of the most prominent keywords and their variants within your site. This data can be used to help ensure you are targeting the right keywords within your content. |
| Optimization | Remove URLs | If you have made URLs unreachable via robots.txt, you can request that Google removes these URLs from its index via this section. |
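To see why URL parameters can cause duplicate content, it can help to group a list of crawled URLs by what they look like once the tracking-only parameters are removed. The following is a rough Python sketch, not a description of how Google handles parameters. The parameter names in `TRACKING_PARAMS` and the example URLs are assumptions chosen for illustration.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that only track visits and never change page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def strip_tracking_params(url):
    """Return the URL without tracking parameters, with the rest sorted."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    ]
    kept.sort()  # so ?a=1&b=2 and ?b=2&a=1 are treated as the same page
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def group_duplicates(urls):
    """Map each cleaned URL to the list of original URLs that collapse into it."""
    groups = {}
    for url in urls:
        groups.setdefault(strip_tracking_params(url), []).append(url)
    return groups


urls = [
    "http://www.example.com/shoes?colour=red&utm_source=newsletter",
    "http://www.example.com/shoes?colour=red",
    "http://www.example.com/shoes?sessionid=abc&colour=red",
    "http://www.example.com/shoes?colour=blue",
]

for cleaned, originals in group_duplicates(urls).items():
    print(len(originals), cleaned)
```

Here three of the four URLs collapse into the same page, which is the kind of pattern worth understanding before changing anything in the URL Parameters settings.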
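For the Blocked URLs check, you can also test a robots.txt file against a list of important URLs yourself. The sketch below uses Python's standard `urllib.robotparser`. The rules and URLs are invented for the example, and Python's parser may not match Googlebot's handling of wildcard patterns exactly, so treat the result as a quick sanity check only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
robots_txt = """\
User-agent: *
Disallow: /checkout/
Disallow: /search
"""


def blocked_urls(robots_lines, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt lines block for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


important_urls = [
    "http://www.example.com/",
    "http://www.example.com/checkout/basket",
    "http://www.example.com/search?q=shoes",
    "http://www.example.com/products/shoes",
]

for url in blocked_urls(robots_txt.splitlines(), important_urls):
    print("blocked:", url)
```

With these sample rules, the checkout and search URLs are reported as blocked while the home page and product page are not.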
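The Crawl Errors notes mention that there is normally a pattern in the data. If you would rather script it than use Excel, one simple approach is to count errors by the top-level section of the site. This is a minimal sketch that assumes you already have the error URLs as a plain list. The URLs and the `errors_by_section` helper are hypothetical.

```python
from collections import Counter
from urllib.parse import urlsplit


def errors_by_section(urls):
    """Count error URLs by their first path segment, most frequent first."""
    counts = Counter()
    for url in urls:
        segments = [s for s in urlsplit(url).path.split("/") if s]
        # Pages directly under the root are grouped together as "/".
        section = "/" + segments[0] + "/" if len(segments) > 1 else "/"
        counts[section] += 1
    return counts.most_common()


# Hypothetical 404 URLs, as they might appear in a downloaded report.
not_found = [
    "http://www.example.com/blog/2012/old-post",
    "http://www.example.com/blog/2011/another-post",
    "http://www.example.com/blog/tag/seo",
    "http://www.example.com/products/discontinued-item",
    "http://www.example.com/contact.html",
]

for section, count in errors_by_section(not_found):
    print(count, section)
```

In this sample most of the errors sit under `/blog/`, which would point to a single fix such as a redirect rule for that section.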
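When mining the Search Queries data, CTR is simply clicks divided by impressions, expressed as a percentage. The short sketch below recalculates it and lists queries with plenty of impressions but a low CTR, which are often candidates for better titles and descriptions. The queries, the numbers and both thresholds are made up for illustration.

```python
def click_through_rate(clicks, impressions):
    """Return CTR as a percentage, or 0.0 when there are no impressions."""
    if impressions == 0:
        return 0.0
    return 100.0 * clicks / impressions


def low_ctr_queries(rows, min_impressions=100, max_ctr=10.0):
    """Return (query, ctr) pairs with enough impressions but a CTR below max_ctr."""
    return [
        (query, click_through_rate(clicks, impressions))
        for query, impressions, clicks in rows
        if impressions >= min_impressions
        and click_through_rate(clicks, impressions) < max_ctr
    ]


# Hypothetical rows: (query, impressions, clicks).
rows = [
    ("blue widgets", 1200, 60),
    ("buy widgets online", 300, 45),
    ("widget repair", 50, 0),
]

for query, ctr in low_ctr_queries(rows):
    print(f"{query}: {ctr:.1f}%")
```

Only "blue widgets" is flagged here: it has a 5% CTR on 1,200 impressions, while "widget repair" is skipped because it has too few impressions to judge.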