Keeping PPC accounts performing at their best involves regularly testing, analysing the results and applying the learnings. This post looks specifically at how you can optimise and automate ad tests. A/B testing your ads is good practice in any PPC account, as there are always tweaks that can be made. Does “save 20%” make more people click than “20% off”? Which of your USPs should you be pushing? However, ad testing can easily fall by the wayside when more urgent tasks compete with the chore of downloading all your ads, stripping out the ones you want to test, stitching the data back together and then finding out the result isn’t significant anyway.

Here at Search Laboratory, we’ve been automating bid decisions for many years, and we’ve now developed a system that can do the same for ad text decisions, called AlertLab. We know the robots can’t write ads for us (“50% off anti-aging cream for your inferior human flesh”), but they do have a number of advantages over people. AlertLab:

- Downloads stats on a daily basis without affecting productivity.

- Checks that no ads have been added or removed, so the test is still fair.

- Uses objective statistical tests rather than opinions.

- Keeps track of multiple tests over large accounts.

Protection from statistical errors

If designed correctly, automation can protect you from these common ad testing mistakes:

- Not having a fair test.

- Missing ad-group level trends.

- False positives from low data volumes.

To ensure a fair test, we need to keep every variable other than the ad text the same. This means running the ads we want to compare on the same keywords, over the same time period, on an even split. If an ad in one ad group is accidentally deleted, AlertLab stops the test and does not resume it until the test is fair again.
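The fairness rules above can be expressed as a simple check that runs before each day's significance test. The sketch below is a minimal illustration, not AlertLab's code: the `AdSnapshot` record, the `is_test_fair` function and the 60% `max_share` tolerance used to define an "even split" are all hypothetical. It covers the same-keywords and even-split conditions; the same-time-period condition would be handled by only counting stats from days on which the check passed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdSnapshot:
    """Hypothetical record of one ad in a test."""
    ad_group: str     # ad group (and therefore keywords) the ad serves on
    variant: str      # which test variant the ad carries, e.g. "A" or "B"
    enabled: bool     # False if the ad was paused or deleted
    impressions: int  # impressions received over the test period


def is_test_fair(ads, variants=("A", "B"), max_share=0.6):
    """Return True if the test is still fair.

    Assumed rules (illustrative only):
    1. Every ad group has exactly one enabled ad per variant, so all
       variants run on the same keywords.
    2. Within each ad group, no variant takes more than `max_share`
       of the impressions, i.e. the split is roughly even.
    """
    for group in {ad.ad_group for ad in ads}:
        live = [ad for ad in ads if ad.ad_group == group and ad.enabled]
        if sorted(ad.variant for ad in live) != sorted(variants):
            return False  # an ad was added, paused or deleted
        total = sum(ad.impressions for ad in live)
        if total and max(ad.impressions for ad in live) / total > max_share:
            return False  # serving is skewed towards one variant
    return True


# Hypothetical snapshot in which variant B was deleted from one ad group.
ads = [
    AdSnapshot("cheap garden furniture", "A", True, 1000),
    AdSnapshot("cheap garden furniture", "B", True, 980),
    AdSnapshot("luxury garden furniture", "A", True, 400),
    AdSnapshot("luxury garden furniture", "B", False, 390),
]
print(is_test_fair(ads))  # False
```

A scheduler could simply skip the significance test on any day where `is_test_fair` returns `False`, and resume once it returns `True` again.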

AlertLab also calculates performance at ad-group level, so outliers can be caught. For example, if you have “cheap garden furniture” and “luxury garden furniture” ad groups in the same campaign, they might end up in the same test but respond differently to the same ad text.
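A minimal sketch of an ad-group-level check, assuming hypothetical per-ad-group stats for two variants, A and B. It compares each ad group's CTR winner with the winner on the pooled data and flags any group that disagrees. For simplicity it compares raw CTRs only; a real check would also test each ad group for significance before acting on it. The function names and data layout are illustrative, not AlertLab's.

```python
def ctr(clicks, impressions):
    """Click-through rate, treating zero impressions as 0%."""
    return clicks / impressions if impressions else 0.0


def ad_group_outliers(stats):
    """Return (overall_winner, outlier_groups) for a two-variant test.

    stats maps ad group -> {"A": (clicks, impressions), "B": (clicks, impressions)}.
    Ties go to A.
    """
    clicks = {"A": 0, "B": 0}
    impressions = {"A": 0, "B": 0}
    for per_variant in stats.values():
        for variant, (c, i) in per_variant.items():
            clicks[variant] += c
            impressions[variant] += i

    def winner(a, b):
        return "A" if ctr(*a) >= ctr(*b) else "B"

    overall = winner((clicks["A"], impressions["A"]), (clicks["B"], impressions["B"]))
    # Ad groups whose own winner disagrees with the pooled result
    outliers = [
        group for group, per_variant in stats.items()
        if winner(per_variant["A"], per_variant["B"]) != overall
    ]
    return overall, outliers


# Made-up numbers: A wins overall, but the luxury ad group prefers B.
stats = {
    "cheap garden furniture": {"A": (300, 10_000), "B": (200, 10_000)},
    "luxury garden furniture": {"A": (20, 2_000), "B": (40, 2_000)},
}
print(ad_group_outliers(stats))  # ('A', ['luxury garden furniture'])
```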

When manually testing ads, you might be tempted to look at your ads too early and just take whichever is performing best. But low-volume data can easily be misleading, especially if you’re not testing for significance.

In a scientific setting, you would keep your data sealed until the end of the test, because the risk of a false positive rises the more often you check the data for significance. AlertLab can require a minimum number of trials before testing, or test only on the last day of the test; both options guard against false positives. But sometimes in business you need to make a decision even if you’re not 100% confident. So long as you set the significance threshold higher than 95% and use your judgement, you should be making a well-founded decision.
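The effect of checking too often can be demonstrated with a quick simulation. In the sketch below (an illustration, not AlertLab's implementation), both ads have the same true CTR, so any "significant" result is a false positive by construction. It compares running a pooled two-proportion z-test every day against running it only on the final day; all parameters (CTR, traffic, test length, 5% significance level) are hypothetical.

```python
import math
import random


def z_test_p_value(clicks_a, n_a, clicks_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no variation yet, so nothing to test
    z = (clicks_a / n_a - clicks_b / n_b) / se
    return math.erfc(abs(z) / math.sqrt(2))


def simulate(n_tests=300, days=20, daily_impressions=100, ctr=0.05, alpha=0.05, seed=1):
    """Return (false-positive rate when checking daily, rate when checking once)."""
    rng = random.Random(seed)
    peek_hits = final_hits = 0
    for _ in range(n_tests):
        clicks_a = clicks_b = n = 0
        significant_at_any_peek = False
        for _ in range(days):
            n += daily_impressions
            # Both ads share the same true CTR (the null hypothesis is true)
            clicks_a += sum(rng.random() < ctr for _ in range(daily_impressions))
            clicks_b += sum(rng.random() < ctr for _ in range(daily_impressions))
            if z_test_p_value(clicks_a, n, clicks_b, n) < alpha:
                significant_at_any_peek = True
        peek_hits += significant_at_any_peek
        final_hits += z_test_p_value(clicks_a, n, clicks_b, n) < alpha
    return peek_hits / n_tests, final_hits / n_tests


peeking, final_only = simulate()
print(f"false-positive rate, checking daily: {peeking:.1%}")
print(f"false-positive rate, final day only: {final_only:.1%}")
```

Because the final-day check is also one of the daily checks, the daily-checking rate can never be lower than the final-day rate; with many checks it is typically several times higher than the nominal 5%.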

What exactly are we measuring?

Advertisers often disagree about which metric is best for judging ads. Obviously, if you’re testing a landing page then you should use conversion rate. But what about “save 20%” vs “20% off”? Some swear by impression-to-conversion rate (ICR), but you need a large volume of impressions before the result becomes significant. This is where we need a bit of human judgement to make a decision.
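For reference, these are the standard definitions of the three metrics. Because ICR is the product of CTR and CVR, it is a much smaller proportion, which is why it needs more impressions to reach significance.

```latex
\mathrm{CTR} = \frac{\text{clicks}}{\text{impressions}}, \qquad
\mathrm{CVR} = \frac{\text{conversions}}{\text{clicks}}, \qquad
\mathrm{ICR} = \frac{\text{conversions}}{\text{impressions}} = \mathrm{CTR} \times \mathrm{CVR}
```

With hypothetical figures of 5% CTR and 2% CVR, ICR is 0.1%. For a two-proportion test, the impressions needed to detect the same relative uplift scale roughly with (1 − p)/p, so testing on ICR would need on the order of 50 times as many impressions as testing on CTR (999/19 ≈ 53).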

We can set AlertLab to test CTR (click-through rate), ICR or CVR (conversion rate) to suit different business needs and to optimise your ads in line with other KPIs. We use a difference-of-proportions z-test with a user-defined significance threshold to determine when a decision can be made.
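A minimal sketch of a pooled difference-of-proportions z-test with a user-defined threshold, written in Python for illustration. It is not AlertLab's code: the function names are hypothetical, and reporting "confidence" as one minus the two-sided p-value is an assumption (some tools report a one-sided value instead). The same function works for CTR (clicks out of impressions), CVR (conversions out of clicks) or ICR (conversions out of impressions).

```python
import math


def two_proportion_z_test(successes_a, trials_a, successes_b, trials_b):
    """Pooled difference-of-proportions z-test.

    Returns (z, confidence), where confidence = 1 - two-sided p-value.
    A positive z means variant A has the higher rate.
    """
    p_a = successes_a / trials_a
    p_b = successes_b / trials_b
    pooled = (successes_a + successes_b) / (trials_a + trials_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / trials_a + 1 / trials_b))
    if se == 0:
        return 0.0, 0.0  # no variation: no evidence either way
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return z, 1 - p_value


def decide(successes_a, trials_a, successes_b, trials_b, threshold=0.95):
    """Return "A" or "B" once confidence reaches the threshold, else None."""
    z, confidence = two_proportion_z_test(successes_a, trials_a, successes_b, trials_b)
    if confidence < threshold:
        return None  # not significant yet: keep testing
    return "A" if z > 0 else "B"


# Hypothetical CTR data: A has 250 clicks from 10,000 impressions, B has 320.
print(decide(250, 10_000, 320, 10_000))                   # B (confidence about 99.7%)
print(decide(250, 10_000, 320, 10_000, threshold=0.999))  # None
```

Raising the threshold trades speed for safety: the same data that is conclusive at 95% is not yet conclusive at 99.9%.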

Why not let Google do it for you?

It’s true that you could just select “optimise my ads for clicks/conversions” and then periodically come back and pause the ads that Google has decided are underperforming. But really, this is like using broad match keywords, or CPA (cost per action) bidding. You can let Google decide if a search is relevant or what your keyword bids should be, but it’s usually preferable to do it yourself or use an automation that you understand and trust.

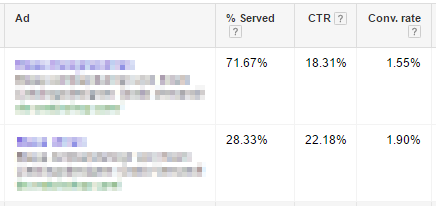

Google can make the wrong decisions. For example, here is data from two actual ads:

Google was showing ad #1 more often, but ad #2 was outperforming it in both CTR and CVR. The difference in click-through rate was significant at the 99.9% confidence level. By letting Google optimise our ads, we were potentially missing out on 20% of our conversions.

Another advantage of AlertLab is that you find out as soon as one ad is performing significantly better than another. You can then start a new test using what you’ve learned, and continually improve your ads.
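The "decide as soon as possible" behaviour can be sketched as a loop over cumulative daily totals that stops on the first day both ads have reached a minimum number of trials and the difference clears the threshold. The daily data format, the function names and the default `min_trials` of 1,000 are hypothetical; as discussed above, the minimum-trials guard matters because every extra daily check adds false-positive risk.

```python
import math


def confidence(s_a, n_a, s_b, n_b):
    """1 - two-sided p-value of a pooled two-proportion z-test."""
    pooled = (s_a + s_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0
    z = (s_a / n_a - s_b / n_b) / se
    return 1 - math.erfc(abs(z) / math.sqrt(2))


def first_decision_day(daily_stats, threshold=0.95, min_trials=1000):
    """Return (day, winner) for the first day a winner can be declared, else None.

    daily_stats: list of (successes_a, trials_a, successes_b, trials_b) per day.
    """
    s_a = n_a = s_b = n_b = 0
    for day, (ds_a, dn_a, ds_b, dn_b) in enumerate(daily_stats, start=1):
        # Accumulate totals so each check uses all data collected so far
        s_a, n_a, s_b, n_b = s_a + ds_a, n_a + dn_a, s_b + ds_b, n_b + dn_b
        if min(n_a, n_b) < min_trials:
            continue  # too little data: don't look yet
        if confidence(s_a, n_a, s_b, n_b) >= threshold:
            return day, "A" if s_a / n_a > s_b / n_b else "B"
    return None


# Hypothetical CVR test: every day each ad gets 200 clicks;
# A converts 5 of them (2.5%), B converts 8 (4%).
print(first_decision_day([(5, 200, 8, 200)] * 30))  # (6, 'B')
```

With these made-up numbers, day 5 is the first day with 1,000 clicks per ad, but its confidence is only about 94%, so the loop declares B the winner on day 6.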

Results

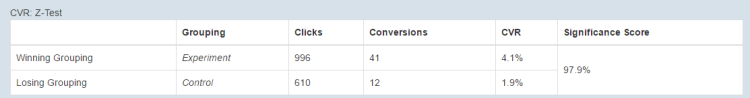

Here are the results from a recent landing page test. We were testing the conversion rate of a newly designed landing page against the current page.

The test ended on day 25 with a significance of 97.9%.

Without AlertLab, we would have waited longer to make a decision: our initial estimate for gathering all the data was six weeks. After we switched completely to the new landing page, its lower CPA allowed us to allocate more budget to the campaign, and conversion volume in the campaign doubled.
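The post doesn't say how the six-week estimate was made. One common way to produce such an estimate is the standard normal-approximation sample-size formula for a two-proportion test; the sketch below assumes that approach, with hypothetical conversion rates, traffic and function names.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_control, p_variant, alpha=0.05, power=0.8):
    """Trials needed per variant for a two-sided two-proportion z-test
    to detect p_control vs p_variant at level alpha with the given power."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_control + p_variant) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_control * (1 - p_control) + p_variant * (1 - p_variant))
    ) ** 2
    return math.ceil(numerator / (p_control - p_variant) ** 2)


def days_needed(p_control, p_variant, daily_trials_per_variant, **kwargs):
    """Convert the required sample size into days of traffic."""
    n = sample_size_per_variant(p_control, p_variant, **kwargs)
    return math.ceil(n / daily_trials_per_variant)


# Hypothetical: current page converts at 4%, new page expected at 5%,
# and each page receives 300 clicks a day.
n = sample_size_per_variant(0.04, 0.05)
print(n, days_needed(0.04, 0.05, daily_trials_per_variant=300))
```

With these made-up inputs the estimate is roughly 6,700 clicks per page, or a little over three weeks. An estimate like this assumes a particular effect size; if the real difference is larger, significance can arrive sooner, which is one reason a test can finish earlier than planned.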

Automating your ad copy testing frees up time for work that only humans can do, and gives you insights about your ads more quickly. This helps to optimise your ad copy, bring in more conversions and – most importantly! – increase your profit. Using our own automation rather than Google’s allows us to properly understand the results and use our own judgement to interpret them. AlertLab is also flexible and provides protection from common statistical errors. In short, you should be considering automation for your ad text testing.

Find out more about your PPC automation options and get in touch with our expert team.