Insights

There has been a lot of talk about ChatGPT over the past few months, and one of the hot topics is using it for copywriting. So, we decided to test it to understand its strengths and limitations, and to work out if and how we could utilise it to enhance the content production process.

Our experiments took the following steps:

The first step was to form a working group and nail down exactly what we wanted to test. Simply testing ChatGPT on copywriting is too broad, as the briefs that come into the team vary. So, we collated a list of over 18 types of content that we could be asked to produce; these included company news blogs, press releases for campaigns, product page copy for websites, interviews, product buying guides, and category page content. We then factored in the differences between writing B2B and B2C copy and ensured our list contained variants of both where relevant.

Once we had the list of content types, we collated the human-generated content for each. We then built prompts for ChatGPT, based on the briefs we typically receive for each piece, so that it could produce an AI version of the same content.

Once these were ready, we ran each prompt through ChatGPT three times: once at temp 0, once at temp 50, and finally at temp 100, to understand how the output changed across the different temp settings.

This gave us four variations of each piece of content to test: one created by humans and three created by ChatGPT at the three different temps (0, 50, and 100).
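The test set described above can be sketched as a simple matrix. This is a minimal illustration rather than the tooling we used: the function name `build_test_matrix` and the row fields are hypothetical, and only six of the content types from our list are shown.

```python
# Content types named in the article (the full list had more than 18 entries).
CONTENT_TYPES = [
    "company news blog",
    "press release",
    "product page copy",
    "interview",
    "product buying guide",
    "category page content",
]

# Temp settings used in the experiment, on the 0-100 scale reported above.
TEMPS = [0, 50, 100]


def build_test_matrix(content_types, temps):
    """Return one row per variant: a human baseline plus one AI row per temp."""
    rows = []
    for content_type in content_types:
        # The human-written piece has no temp setting.
        rows.append({"content_type": content_type, "source": "human", "temp": None})
        for temp in temps:
            rows.append({"content_type": content_type, "source": "chatgpt", "temp": temp})
    return rows


matrix = build_test_matrix(CONTENT_TYPES, TEMPS)
```

Each content type ends up with four rows, which matches the four variations reviewed for every piece.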

As a working group, we reviewed the initial outputs of ChatGPT and made some notes around the quality of its outputs that covered:

This gave us a starting point for whether we would be happy to deliver this content to clients and wider teams.

We knew which content was written by humans and which was written by AI, and we hold a bias (we’re part of the content team, so will always advocate that content is written by humans). So, we blind-tested the content with a wider group of people who weren’t part of the working group and who weren’t aware which content was AI and which was written by humans. These reviewers were selected based on their knowledge of our clients and their involvement as stakeholders in our usual content sign-off process. They would essentially be gatekeepers for getting our content live or sent to clients.

As well as assessing the quality of the content, this group also looked at whether they’d use the content they’d been sent (either by putting it live or sending it over for sign-off from a client).
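One way to set up a blind test like the one described above is to shuffle the variants and hand them out under neutral labels. The sketch below is an assumption about how this could be done, not a record of our process; `blind_variants` and the "Version A" labels are hypothetical names.

```python
import random


def blind_variants(variants, seed=None):
    """Shuffle variants and give each an anonymous label.

    `variants` is a list of (source, text) pairs, e.g. ("human", "...").
    Returns (review_pack, answer_key): reviewers only see review_pack,
    while the working group keeps answer_key to unblind results later.
    """
    rng = random.Random(seed)
    shuffled = list(variants)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)

    review_pack = {}
    answer_key = {}
    for index, (source, text) in enumerate(shuffled):
        label = f"Version {chr(ord('A') + index)}"
        review_pack[label] = text
        answer_key[label] = source
    return review_pack, answer_key
```

Keeping the answer key separate from the review pack means the people scoring the content never see which version came from which source.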

Although the people in the testing group were surprised by how quickly ChatGPT produced content and how legible it was, they found it easy to identify as AI-generated.

What people liked about ChatGPT and its outputs was the speed at which it delivered information. This made it useful for structuring content, doing light research for ideas and tips, and adding in ideas that previously we may not have considered. For example, it suggested adding warranty information to a product page.

Despite this, the flaws with ChatGPT were obvious, and everyone in the testing group flagged the following flaws:

Once we had all the results from the human tests, the next step was to see whether AI could work out if a piece of content was written by a human or by AI, and whether any of the content was considered plagiarised.

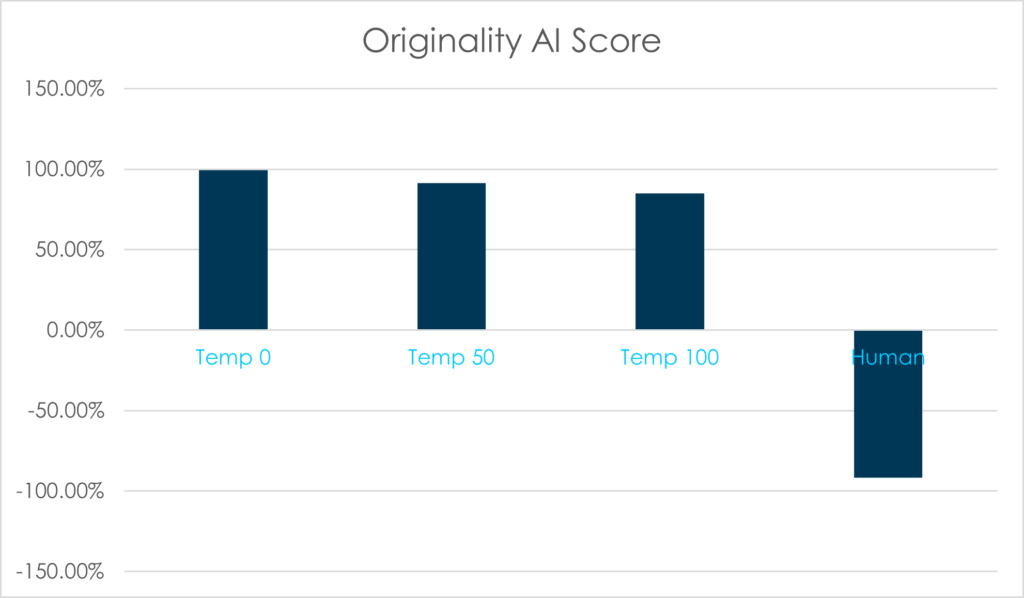

To run these tests, we used Originality. Our research showed Originality to be a leading tool for accuracy, and it allowed us to test for both areas simultaneously. We tested all four examples of each type of content with Originality to understand its outcomes for human-generated and AI-generated content.

All the AI-generated and human-generated content scored zero for plagiarism, meaning that none of it was considered plagiarised.

On average, the AI content scored 91.9% AI-generated within Originality across the three temps, whereas the human-generated content scored an average of 91.75% human-original. This showed that Originality could quickly and accurately detect which content was AI-generated and which was written by humans.

As the temp number increased, the likelihood of Originality detecting that the content was AI-generated decreased very slightly. However, it was still obvious to Originality that temp 100 content was AI-generated, as it scored an average of 84.9% AI-generated.
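The per-temp averages quoted above are simple means of the detector's AI-generated percentage for each piece. As a minimal sketch, assuming the scores have been copied out of the tool by hand into (temp, score) pairs, the grouping could look like this; `average_ai_score_by_temp` is a hypothetical helper and does not call Originality itself.

```python
from collections import defaultdict
from statistics import mean


def average_ai_score_by_temp(results):
    """Average detector 'AI-generated' percentages per temp setting.

    `results` is an iterable of (temp, ai_score_percent) pairs, one per
    piece of AI-generated content that was checked.
    """
    scores_by_temp = defaultdict(list)
    for temp, ai_score in results:
        scores_by_temp[temp].append(ai_score)
    # Sort by temp so the output reads 0, 50, 100.
    return {temp: mean(scores) for temp, scores in sorted(scores_by_temp.items())}
```

Comparing the averages for temp 0, 50, and 100 side by side is what shows whether detection becomes harder as the temp rises.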

Both humans and AI could recognise when content was AI-generated. As the temp of the ChatGPT content increased, detecting that the content was AI became slightly harder for Originality. However, at higher temps the quality reduced, making it easier for humans to detect.

ChatGPT proved good for research, for helping with structure, and for overcoming writer’s block. This led to time efficiencies in content production.

The main flaws with ChatGPT were around quality, how factually correct the information was, and its lack of understanding of a company, its brand, and its history.

Based on its flaws, ChatGPT is not replacing human copywriters right now, and we would never recommend publishing any content from the tool without human input.

There are some content briefs that ChatGPT just would not be relevant for, such as written interviews. For everything else, ChatGPT proved useful for research, ideation, and structure. It cut down time for things like Digital PR tips pieces. That said, research from ChatGPT must be taken with a large pinch of salt, and it cannot yet be relied on for facts and statistics.

We will continue to test AI for use in content creation, and for wider use across the agency. The pace of development of this technology is rapid, so we expect improvements will come very soon.

As ChatGPT grows as an effective tool to aid content creation, so does generative AI’s impact on SEO. In our Future of SEO report, we analyse important challenges affecting organic search and deliver actionable guidance on how to overcome them.

Our Future of SEO report, authored by our Head of SEO and Director of SEO, helps marketers develop future-proofed solutions that will enhance their SEO strategy, enabling them to achieve sustainable SEO success.
